ML - How much faster is a GPU?

How much faster is a GPU vs a CPU?

tl;dr

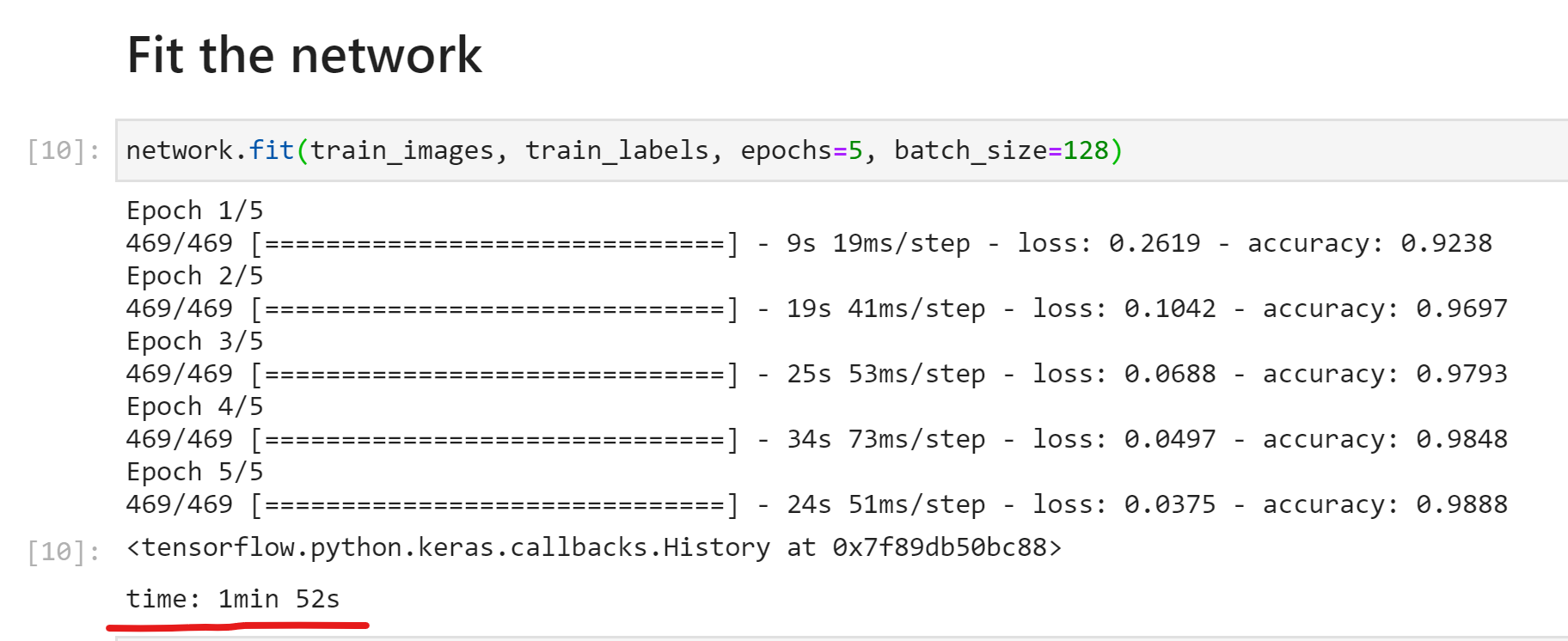

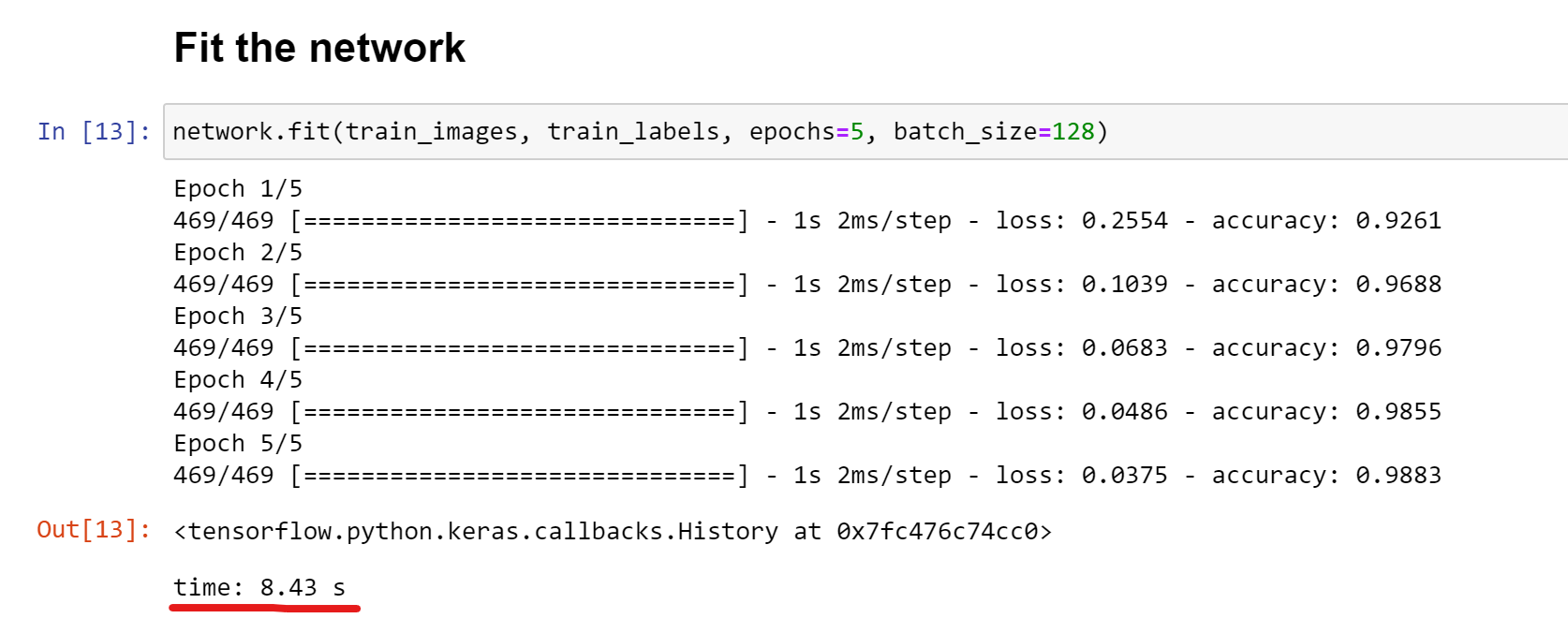

I trained a simple digit-recognition model on the MNIST dataset using a Keras network: once on my laptop’s CPU, and once on a Microsoft Azure VM with a single NVIDIA Tesla P100 GPU.

Training time

| CPU | GPU |
|---|---|
| 1 min 52 s | 8.43 s |

The GPU just blew the CPU away, huh?
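The comparison boils down to two wall-clock measurements and a ratio. Below is a minimal sketch, assuming the training step is wrapped in a zero-argument function. In the notebook that would be the Keras `model.fit(...)` call; the dummy workload here is only a placeholder, and `time_training` and `speedup` are hypothetical helper names.

```python
import time
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def time_training(train_fn: Callable[[], T]) -> Tuple[T, float]:
    """Run train_fn once; return its result and the elapsed wall-clock seconds."""
    start = time.perf_counter()  # monotonic, high-resolution timer
    result = train_fn()
    return result, time.perf_counter() - start


def speedup(slow_seconds: float, fast_seconds: float) -> float:
    """How many times faster the second run was than the first."""
    return slow_seconds / fast_seconds


# Placeholder workload standing in for model.fit(...) (hypothetical).
_, elapsed = time_training(lambda: sum(i * i for i in range(100_000)))

# The measured numbers from the table: 1 min 52 s on CPU, 8.43 s on GPU.
cpu_seconds = 1 * 60 + 52  # 112 s
gpu_seconds = 8.43
print(f"GPU speedup: {speedup(cpu_seconds, gpu_seconds):.1f}x")  # GPU speedup: 13.3x
```

For a fair comparison, the same notebook, data, and hyperparameters should run on both machines so that the hardware is the only variable.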

Experiment description

Everyone studying machine learning has heard that GPUs are popular for it: they accelerate computation-heavy routines, which helps with both training and inference. However, I wanted real-world data on how big the difference actually is, which you can see above.

The experiment

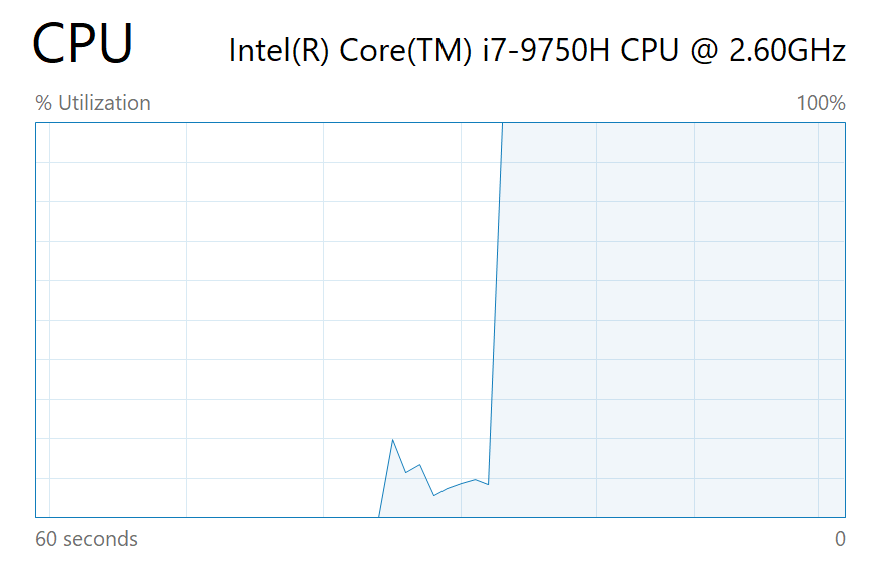

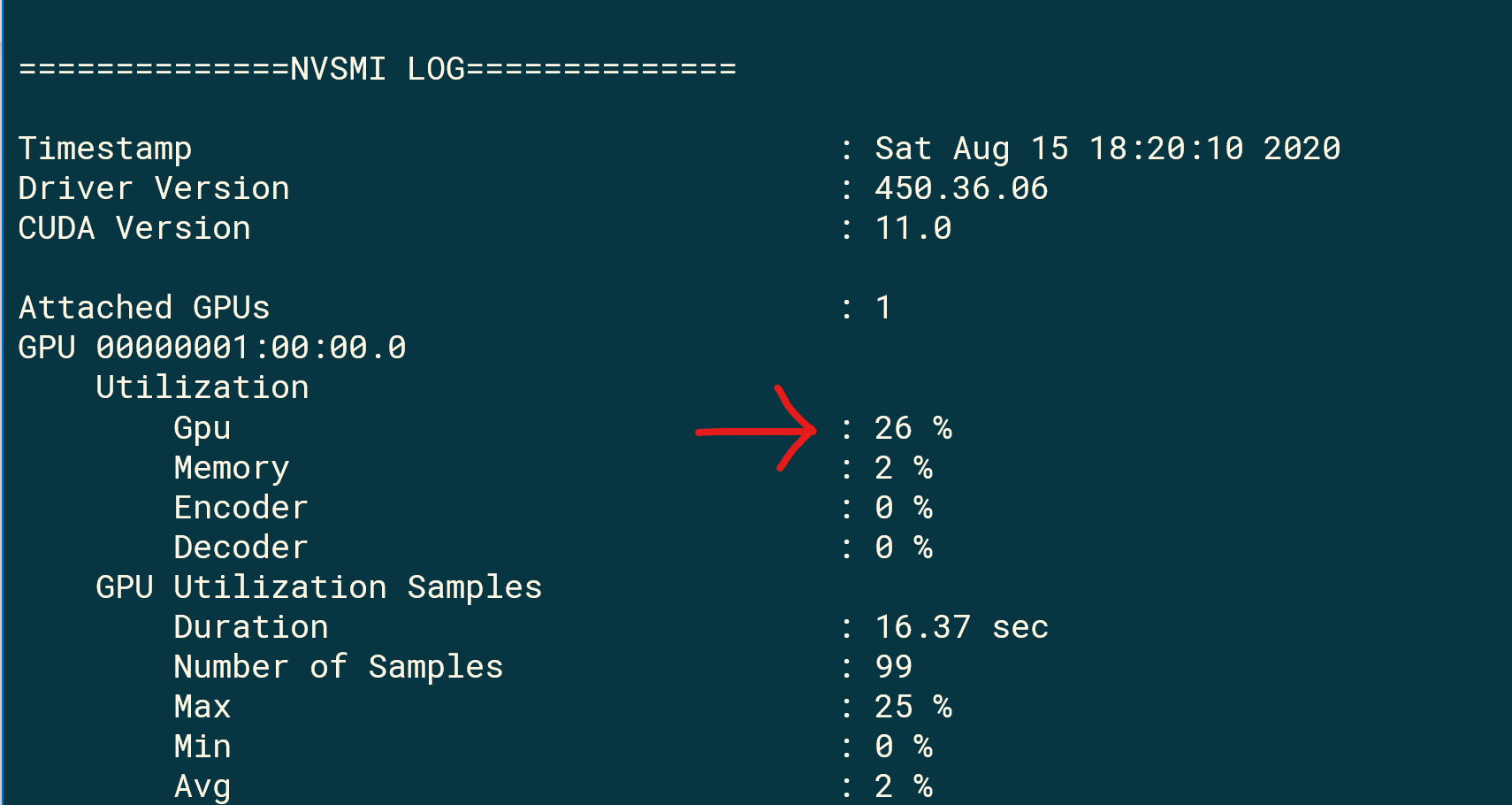

The experiment is the “Hello World” of machine learning: using the MNIST dataset to train a neural network to identify handwritten digits. You can see the actual Jupyter notebook I used HERE. For this experiment, I built an extremely simple network using Keras and trained it first on my laptop’s CPU, then on the Azure VM’s GPU. For those interested, here are the utilization graphs for both runs:

[Utilization graphs: CPU (left), GPU (right)]

As expected, the CPU peaked at 100% utilization while the training routine ran. The GPU, on the other hand, peaked at only 26% (I don’t think the experiment lasted long enough to saturate it…)
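If you want to check GPU utilization yourself rather than rely on a dashboard graph, `nvidia-smi` (installed with the NVIDIA driver) can report it in machine-readable form. This is a minimal sketch, assuming `nvidia-smi` is on the PATH. The helper names are hypothetical, and the parsing is demonstrated on a sample string rather than a live GPU.

```python
import subprocess
import time
from typing import List

# Ask for GPU utilization only, as bare integers (percent), one line per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu",
    "--format=csv,noheader,nounits",
]


def parse_utilization(output: str) -> List[int]:
    """Turn nvidia-smi output (one integer percent per GPU per line) into ints."""
    return [int(line.strip()) for line in output.splitlines() if line.strip()]


def sample_utilization() -> List[int]:
    """Query the current utilization (%) of every visible GPU."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_utilization(out)


def peak_utilization(samples: int = 10, interval_s: float = 1.0) -> int:
    """Poll repeatedly (e.g. while training runs in another process)
    and return the highest reading seen across all GPUs."""
    peak = 0
    for _ in range(samples):
        peak = max(peak, max(sample_utilization(), default=0))
        time.sleep(interval_s)
    return peak


# Parsing works on captured text, so it can be checked without a GPU.
print(parse_utilization("26\n0\n"))  # [26, 0]
```

NVIDIA defines `utilization.gpu` as the percentage of the sample period during which at least one kernel was executing. A reading like 26% therefore suggests the GPU sat idle much of the time (for example, waiting on the CPU to feed it data), rather than that only a quarter of its cores were busy.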

How do I get access to a GPU?

I ran the GPU experiment on an Azure Virtual Machine. Microsoft has been kind enough to prepare a Data Science VM image, which already includes most of the software needed for data science work. Use this image instead of a plain-vanilla Ubuntu image and save hours of your life. It also comes with everything Python code needs to use the GPU, including NVIDIA’s CUDA driver.

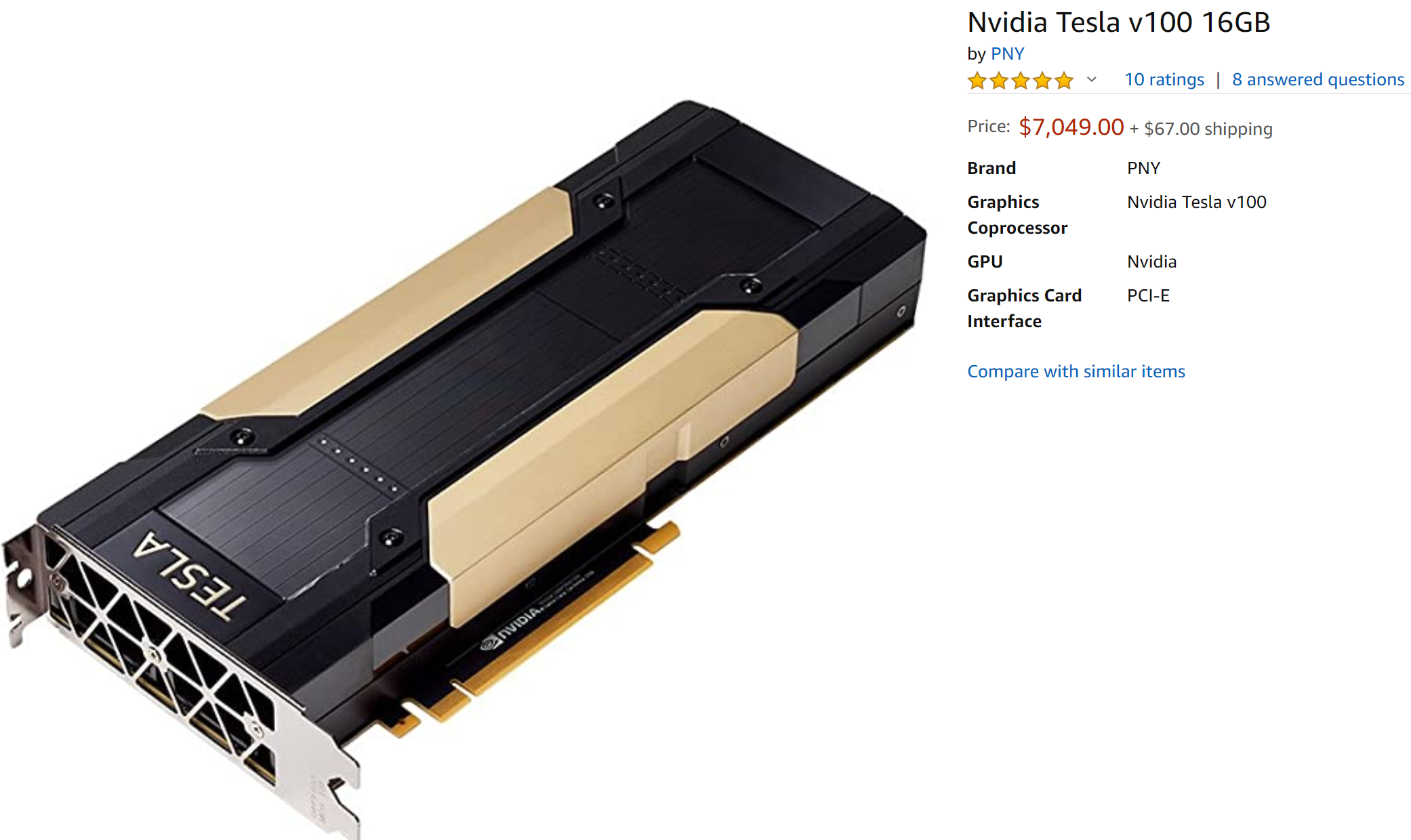

The only thing you need to do is make sure you run the VM on a size (SKU) that includes a GPU. These VMs come with up to four NVIDIA Tesla P100 GPUs attached. Mind you, a Tesla P100 retails for 7,000 US dollars!

Ouch.

Yet you get access to this powerhouse for only 90 cents an hour. Sweet, huh?
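A quick back-of-the-envelope calculation shows why renting wins for occasional experiments. This is a minimal sketch that uses only the figures quoted in this post (7,000 USD retail, 90 cents per hour, the 8.43 s training run). It assumes 0.90 USD/hour is the full price and ignores storage, networking, billing granularity, and time the VM sits idle, so real bills will be higher.

```python
RETAIL_PRICE_USD = 7_000  # Tesla P100 retail price quoted above
HOURLY_RATE_USD = 0.90    # GPU VM price per hour quoted above
GPU_RUN_SECONDS = 8.43    # measured GPU training time

# Hours of rental before you have spent the price of buying the card.
break_even_hours = RETAIL_PRICE_USD / HOURLY_RATE_USD

# Pro-rated compute cost of the training run itself.
run_cost_usd = HOURLY_RATE_USD * GPU_RUN_SECONDS / 3600

print(f"Break-even: {break_even_hours:,.0f} hours (~{break_even_hours / 24:,.0f} days)")
# Break-even: 7,778 hours (~324 days)
print(f"Cost of the training run: ${run_cost_usd:.4f}")
# Cost of the training run: $0.0021
```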

If you are not tied to Azure, Amazon EC2 also offers GPU VMs by the hour, and Google Cloud’s Compute Engine has similar offerings.
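Whichever provider you pick, it is worth confirming that the VM actually exposes a GPU before starting a long run. This is a minimal sketch, assuming the NVIDIA driver (and therefore `nvidia-smi`) is installed. `nvidia-smi -L` prints one line per GPU, such as `GPU 0: <name> (UUID: GPU-...)`. The helper names and the sample UUID below are hypothetical and are there only for illustration.

```python
import re
import shutil
import subprocess
from typing import List, NamedTuple


class Gpu(NamedTuple):
    index: int
    name: str
    uuid: str


# Matches lines like "GPU 0: Tesla P100-PCIE-16GB (UUID: GPU-...)".
_LINE = re.compile(r"^GPU (\d+): (.+?) \(UUID: (GPU-[^)]+)\)$")


def parse_gpu_list(output: str) -> List[Gpu]:
    """Extract one Gpu per matching line of `nvidia-smi -L` output."""
    gpus = []
    for line in output.splitlines():
        m = _LINE.match(line.strip())
        if m:
            gpus.append(Gpu(int(m.group(1)), m.group(2), m.group(3)))
    return gpus


def list_gpus() -> List[Gpu]:
    """Return the visible GPUs, or [] if nvidia-smi is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    out = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True, check=True).stdout
    return parse_gpu_list(out)


# Illustrative sample output (the UUID is made up).
sample = "GPU 0: Tesla P100-PCIE-16GB (UUID: GPU-00000000-1111-2222-3333-444444444444)\n"
print([g.name for g in parse_gpu_list(sample)])  # ['Tesla P100-PCIE-16GB']
```

An empty list from `list_gpus()` on a VM you expected to have a GPU usually means the wrong size (SKU) was selected or the driver is missing.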

Of course, the actual benefit of a GPU varies greatly depending on what you are doing: your dataset, your optimizer, your network’s architecture, and your hyperparameters all play a part. But if you were looking for some hard numbers on CPU vs GPU, here is one sample.

In a future post, I will compare a CPU and a GPU when performing a grid search to optimize a model’s hyperparameters. Stay tuned.