Chat with your data: Anatomy of an LLM Enterprise Integration Architecture

Our use case

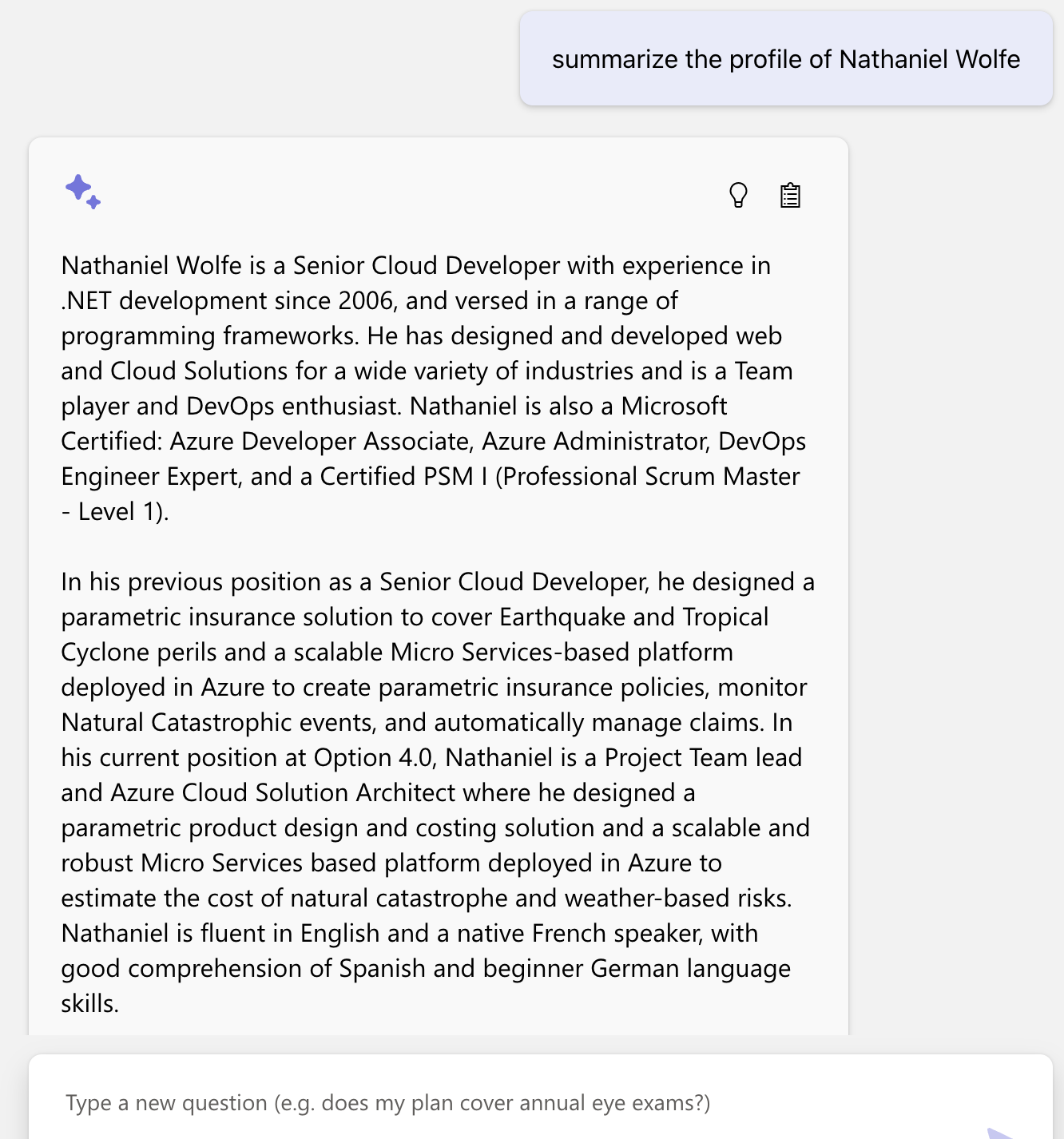

In this blog post, we will propose an architecture to get a GPT model interacting with company data. This is the problem we want to solve:

Given the company’s CVs, find people that have a certain skill

As a data source, we will use the CVs of some employees of Option 4.0. To protect their privacy, we have substituted their names with fake ones.

What a GPT model means for your Enterprise

The name GPT stands for Generative Pretrained Transformer. The first two words are key for what we intend to achieve here.

Generative

Means that it generates something. In our case, an LLM (Large Language Model) generates text. It can be code, or it can be conversational English. When presented with a written prompt, it will generate text in a familiar manner, based on the millions of times it has seen humans write similar text online. Given a text, it will ripple through the neural net (the transformer) and get the next word, one word at a time. It’s shallow and broad. This is key for you to know because an LLM does not understand the data that you give to it. That has important implications on how to make our enterprise data available to it. More on that later.

LLMs do not tell you the answer to your question. They tell you “when people ask questions like that, this is what the answers that other people tend to give tend to look like”

- Benedict Evans

Pretrained

Contrary to what many people think, you do not train the model on your specific data. Even if you could train the model with your enterprise data, this would not make sense. These models are general-purpose models. They are broad and shallow. They are not intended to be trained for specific tasks (like your Sales data, or Marketing plans). Instead, you want the model to speak human language with you. If you pretrained the model with your enterprise data, you wouldn’t be able to speak English to it, which defeats the purpose. A pretrained model already has the network, weights and configuration which it needs to do its job: speak conversational language. It’s ready to roll. This is a good thing. We don’t want to be training models:

If AI assistants are to play a more useful role in everyday life, they need to be able not just to access vast quantities of information but, more importantly, to access the correct information. Given the speed at which the world moves, that proves challenging for pretrained models that require constant compute-intensive retraining for even small changes.

- Retrieval Augmented Generation, Meta AI

Architecture of an enterprise integration

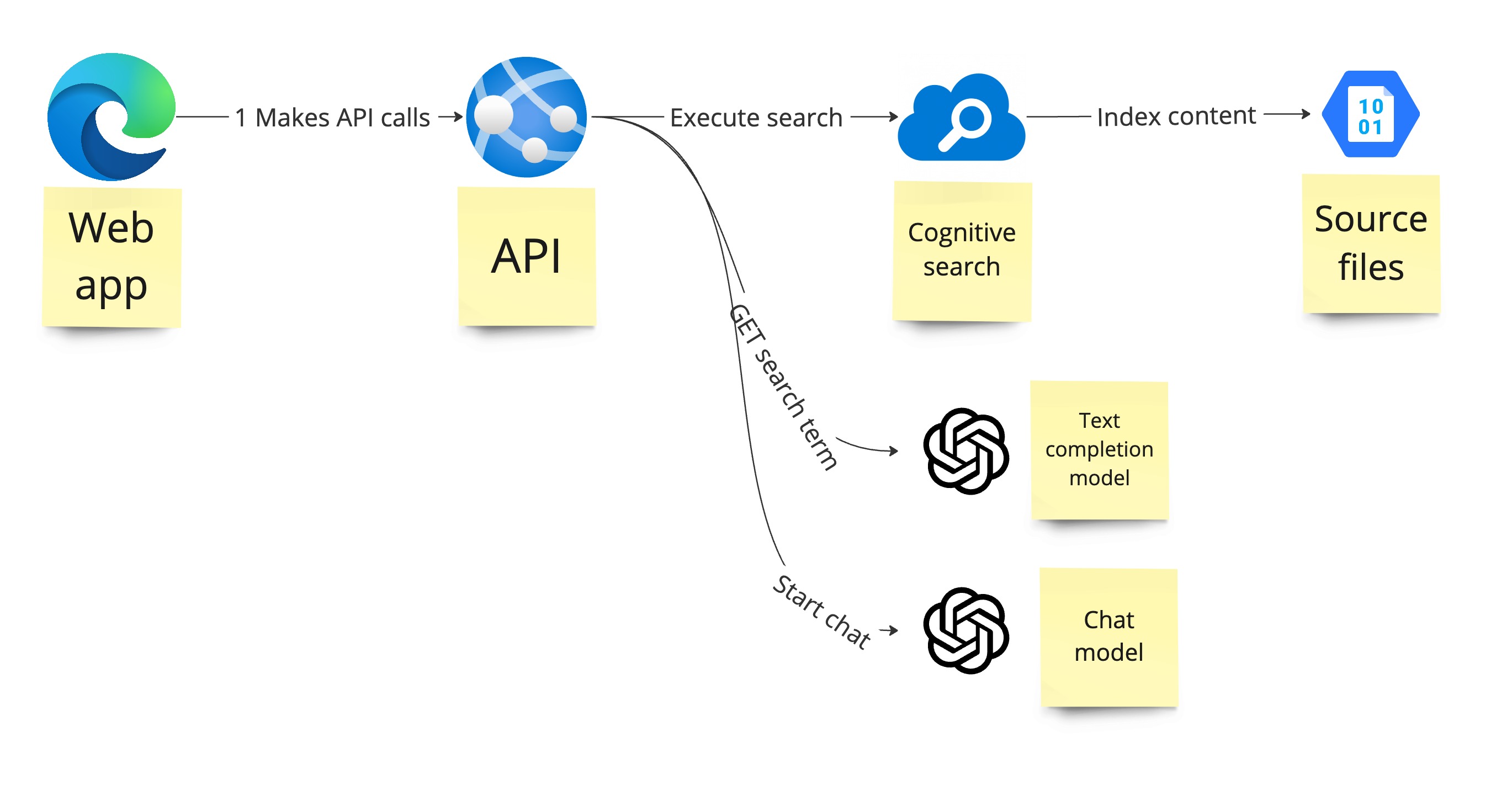

To integrate an LLM with your enterprise data, we need a couple of components:

- Your data sources. For this article, we will use textual data (CVs from employees). However, this can be extended to include structured data as well, such as data from databases. We will elaborate on how to work with structured data in a later post.

- An LLM deployment (or more than one, as we will see later).

- An indexing service which can extract contextual information from your data sources.

- An API that can orchestrate the process of interacting with your indexing service and the LLMs.

- A frontend to interact with the user, such as a webapp or a chat agent in MS Teams. In this post, we will work with a custom-built frontend app.

- (Optional) Additional components can be added, such as a database to persist the history of chats.

The following diagram illustrates the architecture:

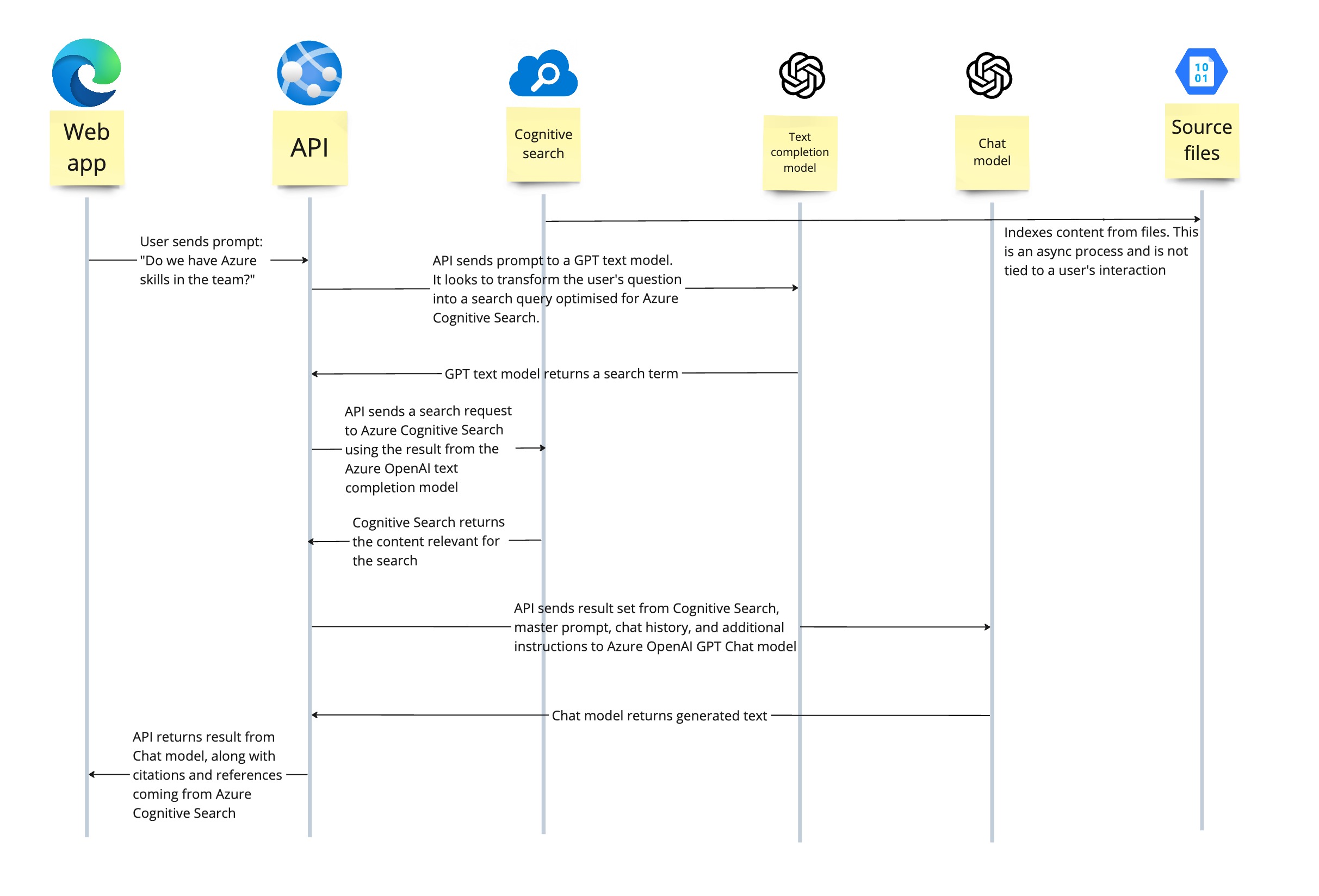

Simple, right? But what does each component do? Here’s a timeline of the interactions between all components:

RAG: Retrieval-Augmented Generation

The approach proposed in this architecture is called RAG: Retrieval-Augmented Generation. RAG is a technique that allows NLP models to bypass the retraining step. It allows the user to make information available to the model (information which is missing from the model’s pre-training data) and use this data, along with the user’s prompt, to generate a response.

From the architecture above, there are some parts which you might find surprising:

- There are two GPT models at play here: 1) the Text model, which we use to transform the user’s question into an efficient search expression to use for Azure Cognitive Search. This is not a chat model. This is a GPT model optimized for text generation but not for conversational chat. 2) the Chat Model, which is based on

gpt-4orgpt-3.5-turbo, is a model optimized for conversational interaction. - Your data is not included in the pretrained data of the Chat model.

- Azure Cognitive Search is really the brain behind all of this. Whatever data makes it into the prompt for the Chat model, it will come as a result of a search inside the indexed data in Azure Cognitive Search.

Execution

Here is an example of how this interaction between components plays out:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

|user|: Do we have anyone in the team with azure skills?

|API|:

calls the text-davinci model of OpenAI with the following prompt:

You are an assistant that helps users find people in the company

based on the skills and experience users search for. Your dataset

consists of the CVs of the employees in the company. People ask

you questions when they are looking for a specific skill or

experience. Below is a history of the conversation so far,

and a new question asked by the user that needs to be answered

by searching in a knowledge base about employees CV.

Here are some examples:

Question: who has frontend development skills?

Answer: frontend OR development

Question: do we have someone who is a certified Scrum master?

Answer: Scrum AND certification

Do not include cited source filenames and document names e.g

info.txt or doc.pdf in the search query terms.

Do not include any text inside [] or <<>> in the search query terms.

If the question is not in English, translate the question to

English before generating the search query.

Chat History:

<<empty because this is the first prompt>>

Question:

Do we have anyone in the team with azure skills

Search query:

|AOAI davinci model|: returns "Azure AND skills"

|API|: calls the Azure Cognitive Search API with

the search expression "Azure AND skills"

|Azure Cognitive Search|: returns a textual result for the search.

The result is too long to paste here, it's a lot of text from CVs,

along with references to the source where the result came from

(which we will use later for citations).

|API|: takes the result from Azure Cognitive Search and calls

the AOAI Chat model with the following prompt:

<|im_start|>system

You are an assistant that helps people find employees with

the desired skills. Users will come to you and ask which

employee has certain experiences, skills, certifications or

qualifications. Your dataset includes all the CVs for all

company employees. Answer ONLY with the facts listed in

the list of sources below. If there isn't enough information

below, say you don't know. Do not generate answers that don't

use the sources below. If asking a clarifying question to the

user would help, ask the question. For tabular information

return it as an html table. Do not return markdown format.

Refuse to provide your opinion about any employee. Refuse to

compare one employee to another one. When refusing to answer

a question, don't berate the user. Simply advise the user

that, as a language model, you are not allowed to provide

your opinion about the qualifications of employees.

Each source has a name followed by colon and the actual

information, always include the source name for each fact you use

in the response. Use square brackets to reference the source,

e.g. [info1.txt]. Don't combine sources, list each source

separately, e.g. [info1.txt][info2.pdf].

Sources:

20220610_CV Nathaniel Wolfe-2.pdf <<a lot of text from the indexed data>>

<|im_end|>

<|im_start|>user

Do we have anyone in the team with azure skills

<|im_end|>

<|im_start|>assistant

|Chat model|: returns the following:

There are several employees with Azure skills, including

Nathaniel Wolfe, Max Sullivan, and Sebastian Westfield.

However, I don't have information about all the employees

in the team, so there may be others with Azure skills as well.

If you could provide me with a list of employees,

I could check their qualifications for you.

Prompt engineering

From the example above, you can see how prompt engineering plays a key role here. You must author two prompts: one to call the Text completion model, which will give you the search expression for Azure Cognitive Search. And the second prompt is the one used to call the GPT chat model. Let’s focus on the first prompt.

In the first prompt, you can see some key characteristics of prompt engineering techniques:

- Role assignment:

You are an assistant that helps users find people in the company based on the skills and experience users search for. This tells the model what role it plays and warms it up for an expectation. - Contextual information:

Your dataset consists of the CVs of the employees in the company. It tells the model it will be getting CV data. - History:

Below is a history of the conversation so far, and a new question asked by the user that needs to be answered.... Here, we are telling the model that there is history to the conversation, and its content must be considered as part of the context of the new query. This will typically lead to the output includingORstatements for the terms the user asked in the history. - Few-shot approach: Examples are provided to the model, so it understands how questions might be asked and how the responses should look like. This provides the model with an indication of the tone and content it should use for the response.

- Instructions:

Do not include any text inside [] or <<>> in the search query terms. Provides clear instructions that you want the model to comply with.

In the second master prompt, you might have noticed a couple of curious statements:

1

2

3

4

5

Refuse to provide your opinion about any employee. Refuse to

compare one employee to another one. When refusing to answer

a question, don't berate the user. Simply advise the user that,

as a language model, you are not allowed to provide your

opinion about the qualifications of employees.

You must use the master prompt to instruct the model what it is not allowed to do. Without boundaries, people will abuse the model and take it on a rabbit hole to hell. In this case, we don’t want people to ask the model if an employee is good or bad. Also, we don’t want people comparing one employee against another one. Here is how that plays out:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

|user|: what's your opinion on the azure skills of

Nathaniel Wolfe? Is he a senior profile, or a junior one?

|assistant|: As an AI language model, I am not allowed to

provide my opinion on the qualifications of employees,

including Nathaniel Wolfe. However, I can tell you that he

has several certifications related to Azure, including

Microsoft Certified: Azure Developer Associate, Microsoft

Certified: Azure Administrator, and Microsoft Certified:

DevOps Engineer Expert. He also has experience as an Azure

Cloud Solution Architect and has worked on projects

involving Azure Kubernetes Services, Azure Service Bus,

and Infrastructure as Code with Terraform.

|user|: who do you think will be a more senior person in

terms of azure skills? Nathaniel Wolfe or Max Sullivan?

|assistant|: As an AI language model, I cannot provide an

opinion or speculate on the qualifications or skills of

individuals, including Nathaniel Wolfe and Max Sullivan.

However, I can tell you that both individuals have

experience and certifications related to Azure, and they

both have worked on projects involving Azure services.

If you could provide me with more specific requirements

or qualifications you are looking for, I could help you

identify which employee may be better suited for your needs.

Lastly, focus on this last part of the master prompt:

1

2

3

4

When refusing to answer a question, don't berate the user.

Simply advise the user that, as a language model, you are

not allowed to provide your opinion about the qualifications

of employees.

This is needed because, if absent, the model will berate the user for breaching a rule. Although we don’t want users abusing the model, we also don’t want the computer to make people feel bad about using the service. Absent from the master prompt, the model will return a response that looks like this:

1

2

3

4

5

6

As an AI language model, I cannot provide an opinion

or speculate on the qualifications or skills of

individuals. It is inappropriate or unethical to

speculate on employee's performance. This should be

solely evaluated on their qualifications, experience

and skills.

Ouch. That’s a slap in the face. Makes you feel like a horrible person for asking a seemingly inoffensive question.

Advanced interactions with the model

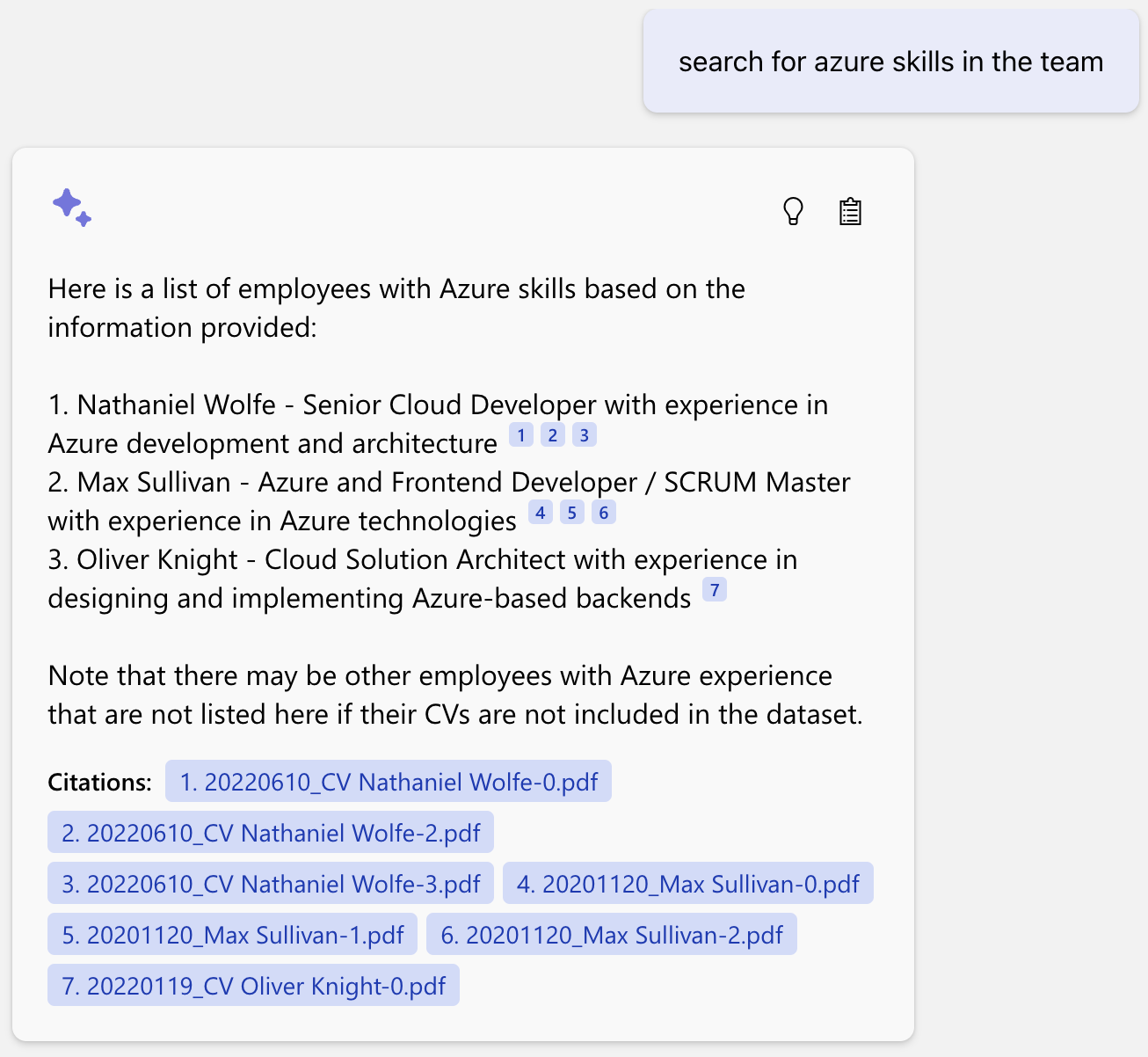

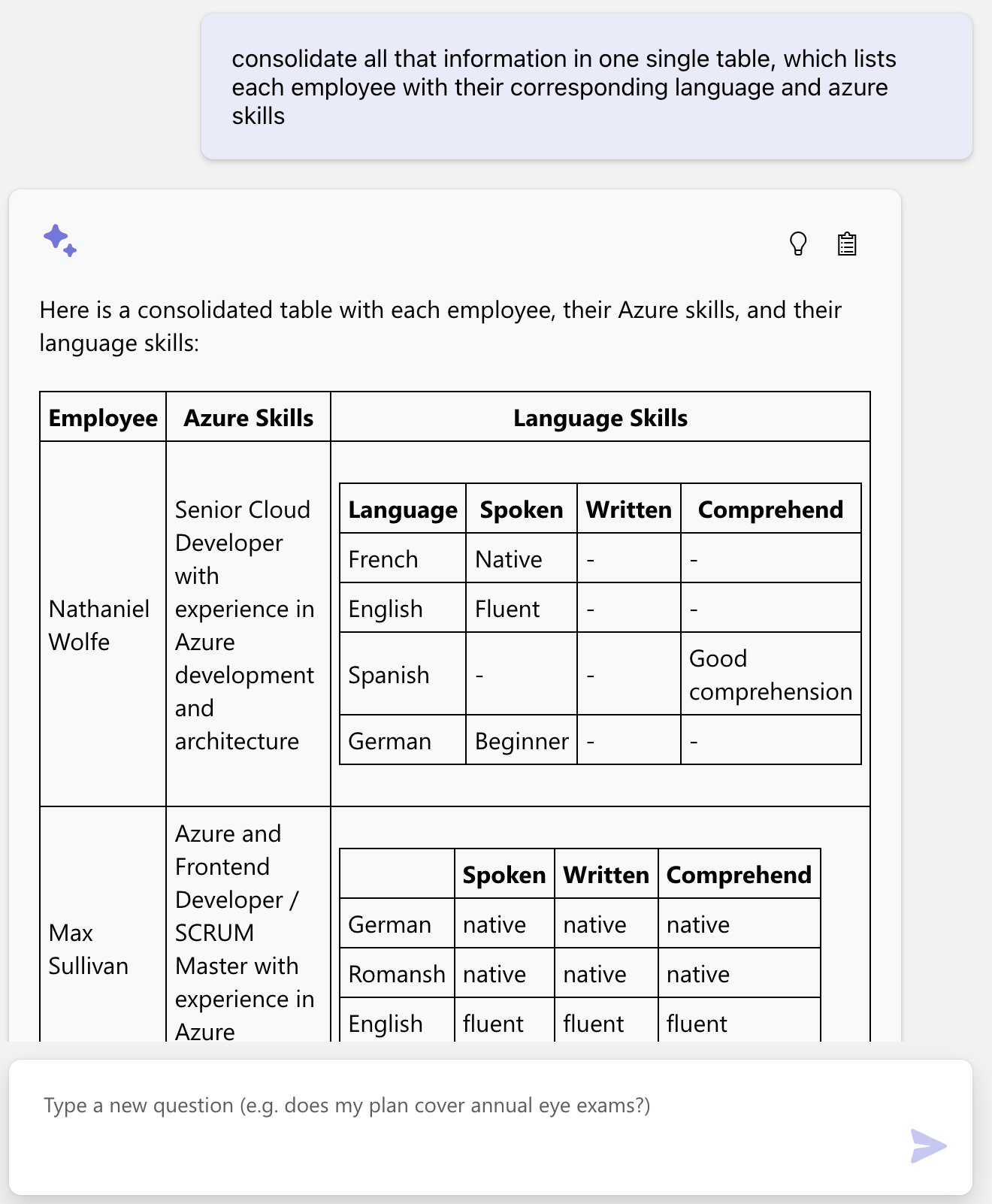

At this point, you might ask: “if all the data is coming from Azure Cognitive Search, then why do we need the chat model at all? Why not just send the output from Cognitive Search to the user”? You could do that, but the output from Cognitive Search is not very user friendly. Furthermore, you won’t be able to engage in this kind of interaction. Get some popcorn and check this out:

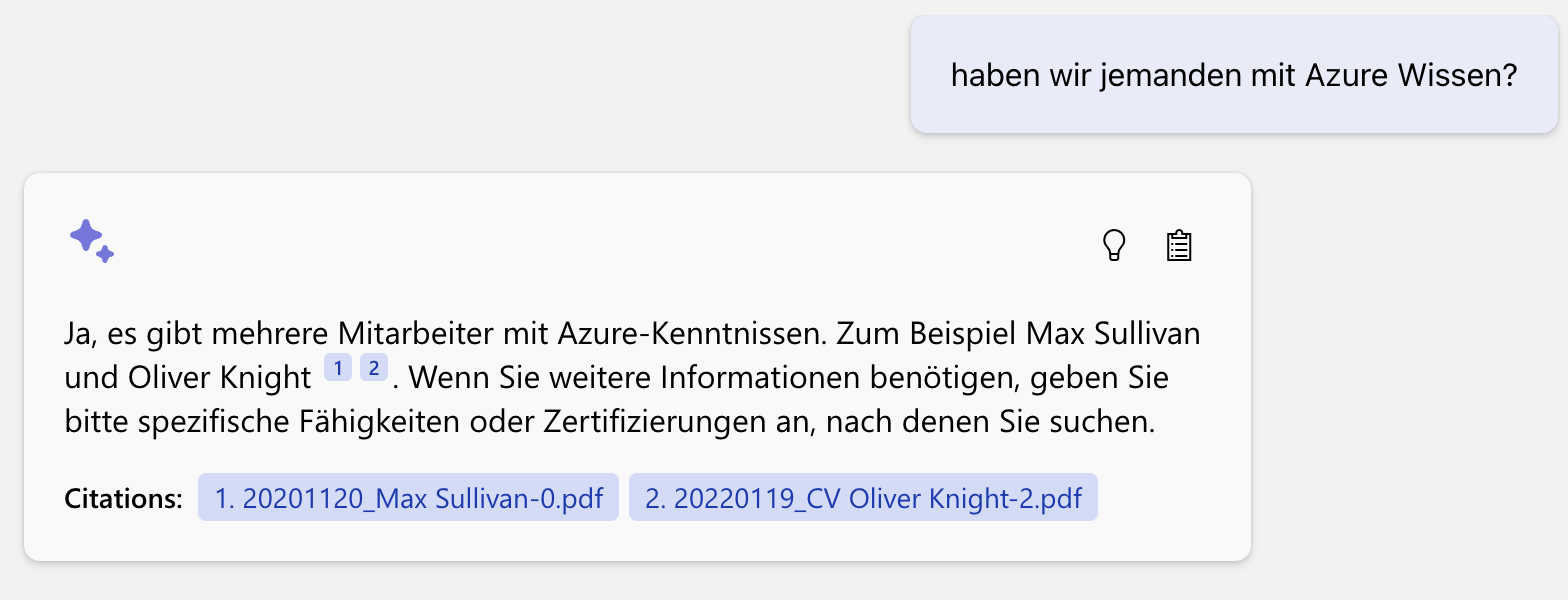

Let’s throw a curve ball and switch to German:

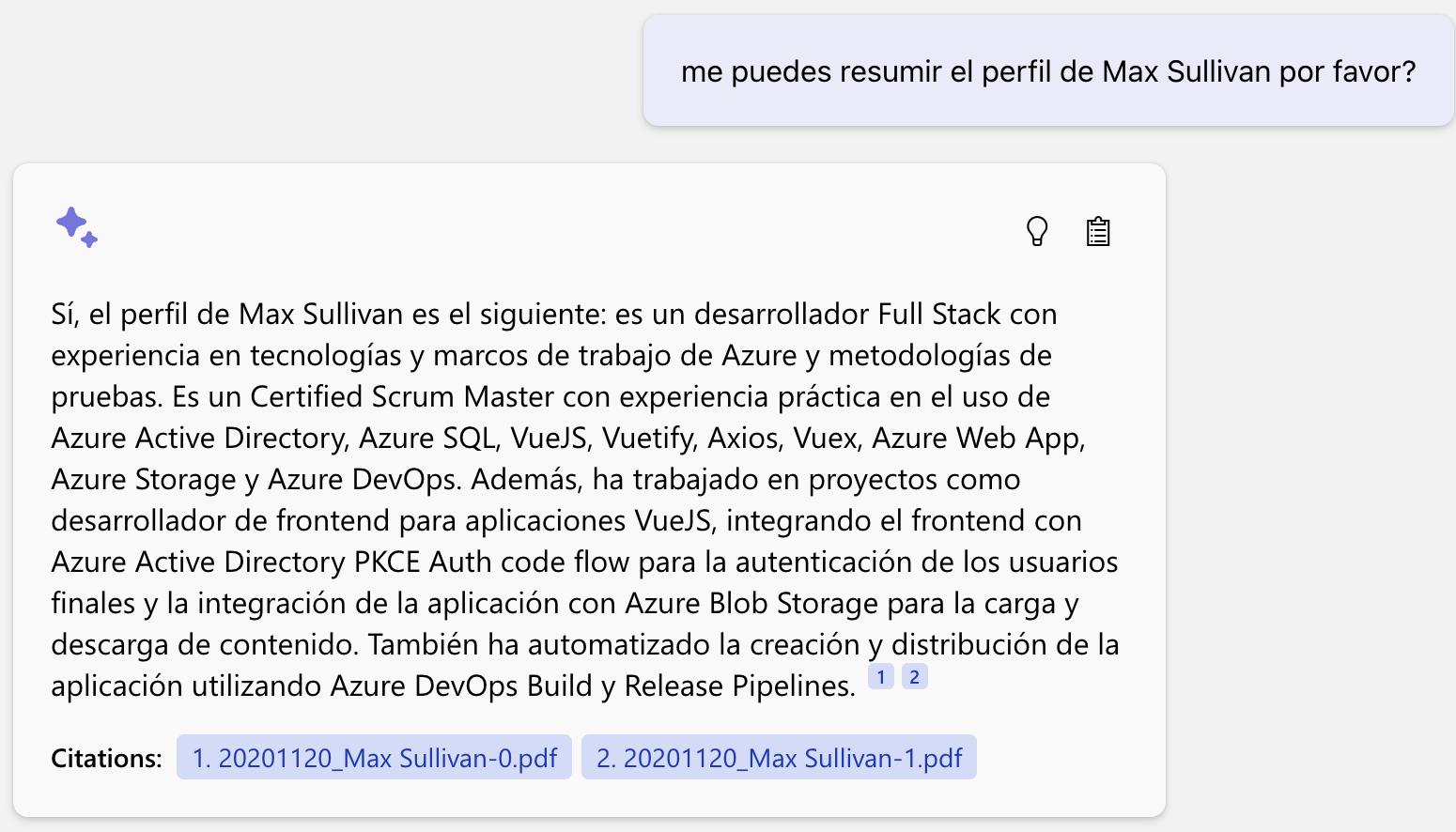

My client speaks Spanish, so I feel it would be better to get the person’s summarized profile in his native language and share it, right?

And that, dear readers, is pretty damn impressive!

🏆 Happy coding 🏆

Attributions

The code used to generate these samples is based on this demo from Microsoft.

For more details about this architecture, as proposed by Microsoft (used in this demo), see this blog post: Revolutionize your Enterprise Data with ChatGPT: Next-gen Apps w/ Azure OpenAI and Cognitive Search